
Mastering Large Language Models: Architecture, Training, and Applications


- Author: Santosh Luitel


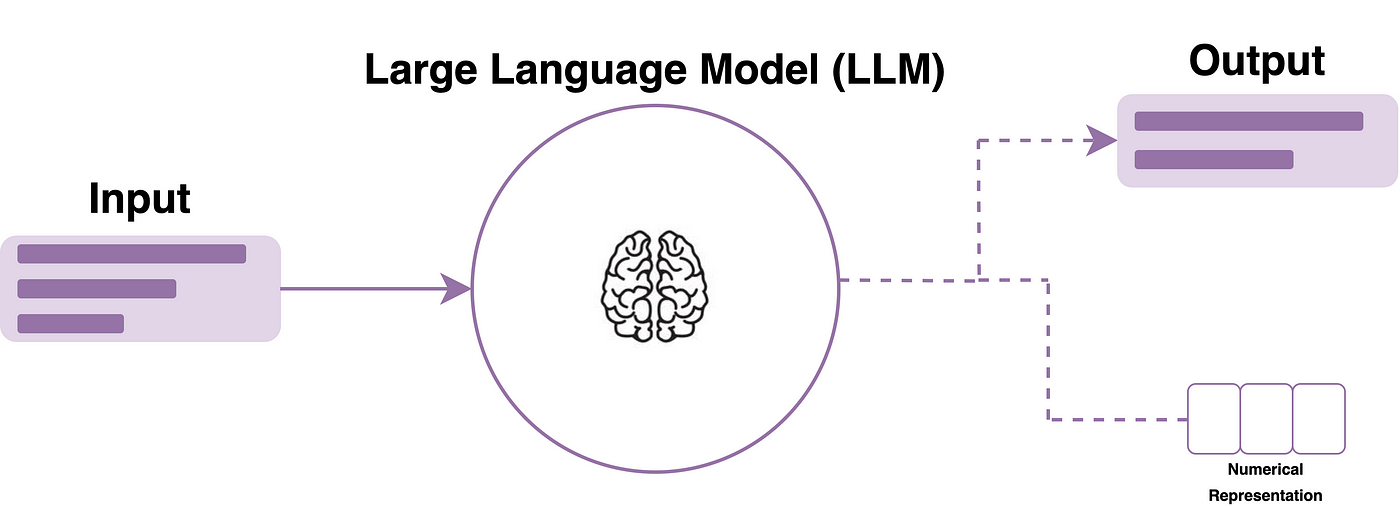

Welcome to this hands‑on deep dive into Large Language Models (LLMs). By the end of this tutorial, you’ll understand:

- 🔍 The Transformer backbone

- 🎯 The self‑attention mechanism

- ⚙️ Pre‑training vs. fine‑tuning workflows

- 🚀 Core applications powering modern AI

- ⚠️ Key challenges and mitigation strategies

- 🔮 Future directions in LLM research

1. Transformer Backbone

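The Transformer (Vaswani et al., 2017) revolutionized NLP by replacing recurrence with self‑attention, which processes all positions of a sequence in parallel. Its main components are shown below in simplified form; in the original design, a residual connection and LayerNorm follow each sub‑layer (attention and feed‑forward), not only the last one: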

┌───────────────┐      ┌──────────────────────┐
│ Input Tokens  │─────▶│ Token Embeddings +   │
│ (words/ids)   │      │ Positional Encodings │
└───────────────┘      └──────────────────────┘
                                  │
                                  ▼
                        ┌───────────────────┐
                        │ Multi‑Head        │
                        │ Self‑Attention    │
                        └───────────────────┘
                                  │
                                  ▼
                        ┌───────────────────┐
                        │ Feed‑Forward      │
                        │ Network (MLP)     │
                        └───────────────────┘
                                  │
                                  ▼
                        ┌───────────────────┐
                        │ Residual +        │
                        │ LayerNorm         │
                        └───────────────────┘
                                  │
                                  ▼
                        … repeated N layers …
                                  │
                                  ▼
                          Transformer Output
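To make the first stage of the diagram concrete, here is a minimal sketch of adding positional encodings to token embeddings. It assumes the fixed sinusoidal encodings from Vaswani et al. (many models use learned or rotary positions instead), and the tiny `toy_table` embedding matrix is a made‑up placeholder, not real model weights.

```python
import math

def sinusoidal_positions(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position encodings."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model).
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def embed(token_ids, embedding_table):
    """Look up each token id and add the encoding for its position."""
    d_model = len(embedding_table[0])
    positions = sinusoidal_positions(len(token_ids), d_model)
    return [
        [e + p for e, p in zip(embedding_table[tok], positions[pos])]
        for pos, tok in enumerate(token_ids)
    ]

# Hypothetical 3-token vocabulary with 4-dimensional embeddings.
toy_table = [
    [0.1, 0.2, 0.3, 0.4],
    [0.5, 0.6, 0.7, 0.8],
    [0.9, 1.0, 1.1, 1.2],
]
x = embed([2, 0, 1], toy_table)
```

Because the encoding depends only on position, the same token id produces a different input vector at each place in the sequence, which is how an otherwise order‑blind attention layer can tell positions apart.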

2. Self‑Attention Mechanism

At the heart of the Transformer is scaled dot‑product attention:

Attention(Q, K, V) = softmax( (Q · Kᵀ) / √dₖ ) · V

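- Q, K, and V are learned linear projections of the layer's input (the token embeddings, for the first layer).

- Dividing by √dₖ keeps the dot products from growing with the key dimension; without it, large scores push the softmax into regions where gradients become vanishingly small.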

- The softmax weights capture context‑dependent token importance.
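The formula above can be sketched in a few lines of plain Python. This is an illustrative single‑head version on nested lists, with no masking, batching, or learned projections; the toy `Q`, `K`, and `V` values are made up for the example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    weights = []
    for q in Q:
        # One score per key: dot product of the query with that key, scaled.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K
        ]
        weights.append(softmax(scores))
    d_v = len(V[0])
    # Each output row is a weighted average of the value vectors.
    output = [
        [sum(w * v[j] for w, v in zip(row, V)) for j in range(d_v)]
        for row in weights
    ]
    return output, weights

# Toy inputs: two tokens, d_k = d_v = 2.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
output, weights = attention(Q, K, V)
```

Each row of `weights` sums to 1, and each query here puts more weight on the key it matches, so the first output row sits closer to the first value vector than to the second.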

3. Pre‑training & Fine‑tuning

Pre‑training

Objective:

- Autoregressive (e.g., GPT): predict next token

- Masked LM (e.g., BERT): predict masked tokens

Data: Hundreds of billions to trillions of tokens from web crawls, books, and code

Compute: Distributed GPU/TPU clusters, typically driven by parallel‑training libraries such as DeepSpeed or Megatron‑LM
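The two pre‑training objectives differ mainly in how training examples are built from raw text. The sketch below illustrates that difference on a list of word tokens. The function names are hypothetical, and the masking is simplified: it always substitutes `[MASK]`, whereas BERT also sometimes keeps the token or swaps in a random one.

```python
import random

MASK = "[MASK]"

def next_token_pairs(tokens):
    """Autoregressive objective: each prefix predicts the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Masked-LM objective: hide some tokens and record what was hidden."""
    rng = random.Random(seed)
    corrupted, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            corrupted.append(MASK)
            targets[i] = tok  # the model must recover this token
        else:
            corrupted.append(tok)
    return corrupted, targets

sentence = ["the", "cat", "sat", "on", "the", "mat"]
pairs = next_token_pairs(sentence)
corrupted, targets = mask_tokens(sentence, mask_prob=0.5)
```

An autoregressive model only ever sees the tokens to the left of its target, while a masked model sees context on both sides of each hidden position.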

Fine‑tuning

- Supervised Tasks: classification, Q&A, summarization

- Instruction Tuning: prompt–response pairs to shape model behavior

- RLHF (Reinforcement Learning from Human Feedback): align outputs with human preferences
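As one illustration of how human preferences enter RLHF, many pipelines first train a reward model on pairs of responses where annotators picked a winner. The sketch below shows a commonly used pairwise loss for that step. The text above does not specify a loss, so treat this as an assumed formulation, and the reward scores are made‑up numbers.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical reward-model scores for two responses to the same prompt.
loss_when_agreeing = preference_loss(2.0, 0.0)     # model prefers the human's pick
loss_when_disagreeing = preference_loss(0.0, 2.0)  # model prefers the other one
```

The loss is small when the reward model ranks the human‑preferred response higher and grows as it ranks the pair the wrong way round. The trained reward model then scores outputs during the reinforcement learning stage.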

4. Core Applications

LLMs power a variety of real‑world capabilities:

- 🤖 Conversational AI: chatbots, virtual assistants

- ✍️ Content Generation: articles, stories, marketing copy

- 💻 Code Assistance: autocomplete, snippet generation, bug fixes

- 🔍 Knowledge Retrieval: document search, RAG systems

- 🌐 Translation & Summarization: often usable zero‑shot or few‑shot, with no task‑specific training
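Zero‑shot and few‑shot use both come down to how the prompt is written: the task is described in text, optionally followed by a handful of worked examples. The helper below is a hypothetical sketch of that idea; the `Input:`/`Output:` layout is an arbitrary choice, not a format any particular model requires.

```python
def build_prompt(task, examples, query):
    """Build a prompt from a task description, optional examples, and a query."""
    lines = [task, ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    # The final "Output:" is left open for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)

task = "Translate English to French."
zero_shot = build_prompt(task, [], "cheese")
few_shot = build_prompt(task, [("sea otter", "loutre de mer")], "cheese")
```

Passing an empty example list gives a zero‑shot prompt; adding pairs turns the same call into a few‑shot prompt without any change to the model.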

5. Key Challenges & Mitigations

Hallucinations

- Models may generate plausible yet incorrect content.

- Mitigation: retrieval grounding, answer verification.
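Retrieval grounding means fetching relevant text first and asking the model to answer from it. The sketch below uses a toy bag‑of‑words cosine similarity as the retriever, where real systems typically use learned embeddings and a vector index. The documents, function names, and prompt wording are all illustrative assumptions.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Lowercased word counts, ignoring punctuation."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(
        documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True
    )
    return ranked[:k]

def grounded_prompt(query, documents, k=1):
    """Put the retrieved text in front of the question."""
    context = "\n".join(retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )

docs = [
    "The Transformer was introduced in 2017.",
    "Quantization stores weights in fewer bits.",
    "BERT is trained with masked language modeling.",
]
question = "When was the Transformer introduced?"
prompt = grounded_prompt(question, docs)
```

The model now has the supporting sentence in its context, which also gives a verification step something concrete to check the answer against.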

Bias & Fairness

- Training data biases can propagate to outputs.

- Mitigation: dataset audits, fairness‑aware fine‑tuning.

Compute & Carbon Footprint

- Training LLMs is energy‑intensive.

- Mitigation: model distillation, quantization, efficient architectures.
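Of these mitigations, quantization is the easiest to show in a few lines. The sketch below assumes symmetric per‑tensor int8 quantization, one of several schemes in use, applied to a made‑up list of weights.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.0, 1.0]  # hypothetical weight values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Each weight now needs one byte instead of four (for float32), at the cost of a rounding error of at most half the scale per weight.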

Privacy & Security

- Models can memorize sensitive training data and reproduce it in their outputs.

- Mitigation: differential privacy, on‑device inference.
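For training, differential privacy is usually applied in the style of DP‑SGD: clip each example's gradient so no single record can dominate, then add noise before averaging. The sketch below shows only that clip‑and‑noise step on plain lists. The gradients are made up, and the privacy accounting that turns a noise level into a formal guarantee is left out.

```python
import math
import random

def clip(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]

def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, seed=0):
    """Clip per-example gradients, sum them, add Gaussian noise, then average."""
    rng = random.Random(seed)
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[j] for g in clipped) for j in range(dim)]
    # Noise standard deviation is tied to the clipping bound.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]

# Hypothetical per-example gradients for a 2-parameter model.
grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]
update = private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1)
```

Clipping bounds how much any one training example can move the model, and the noise hides whatever influence remains, which is what limits memorization of individual records.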

6. Future Directions

- 🔗 Multimodal Models: unify text, vision, audio in a single architecture

- 📱 Edge Deployment: pruned & quantized LLMs for mobile/IoT devices

- 🔄 Continual Learning: incremental updates without full retraining

- 🔒 Stronger Alignment: advanced RLHF, interpretability, and safety research

LLMs have transformed natural language processing. With this foundation, you’re ready to explore custom LLM architectures, optimize training workflows, or build the next generation of AI applications. Happy coding!